
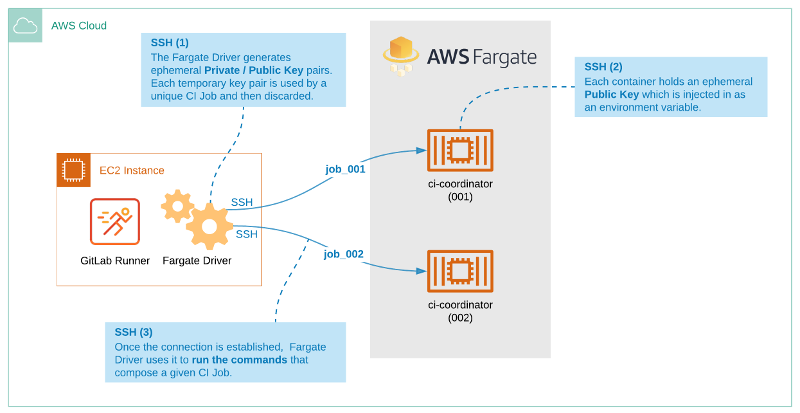

Autoscaling GitLab CI on AWS Fargate

The GitLab custom executor driver for AWS Fargate automatically launches a container on the Amazon Elastic Container Service (ECS) to execute each GitLab CI job.

After you complete the tasks in this document, the executor can run jobs initiated from GitLab. Each time a commit is made in GitLab, the GitLab instance notifies the runner that a new job is available. The runner then starts a new task in the target ECS cluster, based on a task definition that you configured in AWS ECS. You can configure an AWS ECS task definition to use any Docker image, so you have complete flexibility in the type of builds that you can execute on AWS Fargate.

This document shows an example that’s meant to give you an initial understanding of the implementation. It is not meant for production use; additional security is required in AWS.

For example, you might want two AWS security groups:

- One used by the EC2 instance that hosts GitLab Runner and only accepts SSH connections from a restricted external IP range (for administrative access).

- One that applies to the Fargate tasks and allows SSH traffic only from the EC2 instance.

Additionally, if you use a non-public container registry, your ECS task needs either IAM permissions (for Amazon ECR only) or Private registry authentication for tasks (for non-ECR private registries).

You can use CloudFormation or Terraform to automate the provisioning and setup of your AWS infrastructure.

image: keyword in your .gitlab-ci.yml file. This configuration can result in multiple instances of runner manager or in large build containers. AWS is aware of the issue and GitLab is tracking resolution. You might consider creating an EKS cluster instead by following the official AWS EKS Blueprints.

Prerequisites

Before you begin, you should have:

- An AWS IAM user with permissions to create and configure EC2, ECS and ECR resources.

- AWS VPC and subnets.

- One or more AWS security groups.

Step 1: Prepare a container image for the AWS Fargate task

Prepare a container image. You will upload this image to a registry, where it will be used to create containers when GitLab jobs run.

- Ensure the image has the tools required to build your CI job. For example, a Java project requires a Java JDK and build tools like Maven or Gradle. A Node.js project requires node and npm.

- Ensure the image has GitLab Runner, which handles artifacts and caching. Refer to the Run stage section of the custom executor docs for additional information.

- Ensure the container image can accept an SSH connection through public-key authentication. The runner uses this connection to send the build commands defined in the .gitlab-ci.yml file to the container on AWS Fargate. The SSH keys are automatically managed by the Fargate driver. The container must be able to accept keys from the SSH_PUBLIC_KEY environment variable.

View a Debian example that includes GitLab Runner and the SSH configuration. View a Node.js example.
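The entrypoint below is a minimal sketch of one way an image could meet the SSH requirement above: it writes the key from SSH_PUBLIC_KEY into root's authorized_keys and then runs sshd in the foreground. It assumes the OpenSSH server is installed in the image and that jobs log in as root (matching Username = "root" in the fargate.toml example in Step 4). The script and its start argument are hypothetical; this is not the entrypoint used by the published example images.

```shell
#!/usr/bin/env bash
# Hypothetical entrypoint for the ci-coordinator container (a sketch only).
# Assumes OpenSSH server is installed in the image and jobs log in as root.
set -euo pipefail

# Write the public key injected by the Fargate driver into authorized_keys.
install_ssh_public_key() {
  local home_dir="${1:-/root}"
  if [ -z "${SSH_PUBLIC_KEY:-}" ]; then
    echo "SSH_PUBLIC_KEY is not set; the runner will not be able to log in" >&2
    return 1
  fi
  mkdir -p "${home_dir}/.ssh"
  chmod 700 "${home_dir}/.ssh"
  printf '%s\n' "${SSH_PUBLIC_KEY}" > "${home_dir}/.ssh/authorized_keys"
  chmod 600 "${home_dir}/.ssh/authorized_keys"
}

# Run sshd in the foreground so the container stays up while the job runs.
start_sshd() {
  ssh-keygen -A              # generate any missing host keys
  mkdir -p /run/sshd         # privilege separation directory expected by sshd on Debian
  exec /usr/sbin/sshd -D -e  # -D: stay in the foreground, -e: log to stderr
}

# Only act when called as "entrypoint.sh start" (an assumed convention).
if [ "${1:-}" = "start" ]; then
  install_ssh_public_key /root
  start_sshd
fi
```

In a Dockerfile you might copy this script into the image and set something like ENTRYPOINT ["/entrypoint.sh", "start"]. Keeping the key handling in its own function lets you test it without starting sshd.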

Step 2: Push the container image to a registry

After you create your image, publish the image to a container registry for use in the ECS task definition.

- To create a repository and push an image to ECR, follow the Amazon ECR Repositories documentation.

- To use the AWS CLI to push an image to ECR, follow the Getting Started with Amazon ECR using the AWS CLI documentation.

- To use the GitLab Container Registry, you can use the Debian or NodeJS example. The Debian image is published to registry.gitlab.com/tmaczukin-test-projects/fargate-driver-debian:latest. The NodeJS example image is published to registry.gitlab.com/aws-fargate-driver-demo/docker-nodejs-gitlab-ci-fargate:latest.
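If you push to Amazon ECR from your workstation, the procedure in the AWS documentation comes down to roughly the following sketch. It assumes AWS CLI v2 and Docker are installed, your credentials can create and push to ECR repositories, and a Dockerfile is in the current directory. The function names are hypothetical, and the account ID, repository name and tag in the example line are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: build the CI image and push it to Amazon ECR.
# Assumes AWS CLI v2, Docker, and a Dockerfile in the current directory.
set -euo pipefail

# Registry host for an account and region, for example
# 123456789012.dkr.ecr.us-east-2.amazonaws.com
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Full image reference: <registry>/<repository>:<tag>
ecr_image_uri() {
  local account_id="$1" region="$2" repo="$3" tag="${4:-latest}"
  printf '%s/%s:%s\n' "$(ecr_registry "$account_id" "$region")" "$repo" "$tag"
}

push_to_ecr() {
  local account_id="$1" region="$2" repo="$3" tag="${4:-latest}"
  local registry image
  registry="$(ecr_registry "$account_id" "$region")"
  image="$(ecr_image_uri "$account_id" "$region" "$repo" "$tag")"

  # Create the repository only if it does not exist yet.
  aws ecr describe-repositories --region "$region" --repository-names "$repo" >/dev/null 2>&1 \
    || aws ecr create-repository --region "$region" --repository-name "$repo" >/dev/null

  # Log Docker in to the registry, then build and push the image.
  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin "$registry"
  docker build -t "$image" .
  docker push "$image"
}

# Example (placeholder values):
# push_to_ecr 123456789012 us-east-2 fargate-ci latest
```

Use the resulting image reference as the container image in the task definition in Step 6.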

Step 3: Create an EC2 instance for GitLab Runner

Now create an AWS EC2 instance. In the next step you will install GitLab Runner on it.

- Go to https://console.aws.amazon.com/ec2/v2/home#LaunchInstanceWizard.

- For the instance, select the Ubuntu Server 18.04 LTS AMI. The name may be different depending on the AWS region you selected.

- For the instance type, choose t2.micro. Click Next: Configure Instance Details.

- Leave the default for Number of instances.

- For Network, select your VPC.

- Set Auto-assign Public IP to Enable.

- Under IAM role, click Create new IAM role. This role is for test purposes only and is not secure.

- Click Create role.

- Choose AWS service and under Common use cases, click EC2. Then click Next: Permissions.

- Select the check box for the AmazonECS_FullAccess policy. Click Next: Tags.

- Click Next: Review.

- Type a name for the IAM role, for example fargate-test-instance, and click Create role.

- Go back to the browser tab where you are creating the instance.

- To the left of Create new IAM role, click the refresh button. Choose the fargate-test-instance role. Click Next: Add Storage.

- Click Next: Add Tags.

- Click Next: Configure Security Group.

- Select Create a new security group, name it fargate-test, and ensure that a rule for SSH is defined (Type: SSH, Protocol: TCP, Port Range: 22). You must specify the IP ranges for inbound and outbound rules.

- Click Review and Launch.

- Click Launch.

- Optional. Select Create a new key pair, name it fargate-runner-manager and click the Download Key Pair button. The private key for SSH is downloaded to your computer (check the download directory configured in your browser).

- Click Launch Instances.

- Click View Instances.

- Wait for the instance to be up. Note the IPv4 Public IP address.
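The introduction to this document suggests that the runner manager should accept SSH only from a restricted IP range. As a sketch, assuming a configured AWS CLI and using placeholder values, you could add an SSH rule to the fargate-test group that admits a single administrator address. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: allow SSH to the runner manager from one administrator address only.
# Assumes a configured AWS CLI; group ID and address below are placeholders.
set -euo pipefail

# Print "<ip>/32" for a valid dotted IPv4 address, or fail.
host_cidr() {
  local ip="$1" octet
  if [[ ! "$ip" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]]; then
    echo "not an IPv4 address: $ip" >&2
    return 1
  fi
  for octet in "${BASH_REMATCH[@]:1}"; do
    if (( 10#$octet > 255 )); then
      echo "octet out of range in $ip" >&2
      return 1
    fi
  done
  printf '%s/32\n' "$ip"
}

# Add an inbound SSH rule (TCP 22) limited to a single source address.
allow_admin_ssh() {
  local group_id="$1" cidr
  cidr="$(host_cidr "$2")"
  aws ec2 authorize-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port 22 --cidr "$cidr"
}

# Example: allow_admin_ssh sg-xxxxxxxxxxxxx 203.0.113.10
```

This only adds a rule. Remove any broader SSH rule, such as one for 0.0.0.0/0, separately.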

Step 4: Install and configure GitLab Runner on the EC2 instance

Now install GitLab Runner on the Ubuntu instance.

- Go to your GitLab project’s Settings > CI/CD and expand the Runners section. Under Set up a specific Runner manually, note the registration token.

- Ensure your key file has the right permissions by running chmod 400 path/to/downloaded/key/file.

- SSH into the EC2 instance that you created by using:

  ```shell
  ssh ubuntu@[ip_address] -i path/to/downloaded/key/file
  ```

- When you are connected successfully, run the following commands:

  ```shell
  sudo mkdir -p /opt/gitlab-runner/{metadata,builds,cache}
  curl -s "https://packages.gitlab.com/install/repositories/runner/gitlab-runner/script.deb.sh" | sudo bash
  sudo apt install gitlab-runner
  ```

- Run this command with the GitLab URL and registration token you noted in step 1.

  ```shell
  sudo gitlab-runner register --url "https://gitlab.com/" --registration-token TOKEN_HERE --name fargate-test-runner --run-untagged --executor custom -n
  ```

- Run sudo vim /etc/gitlab-runner/config.toml and add the following content:

  ```toml
  concurrent = 1
  check_interval = 0

  [session_server]
    session_timeout = 1800

  [[runners]]
    name = "fargate-test"
    url = "https://gitlab.com/"
    token = "__REDACTED__"
    executor = "custom"
    builds_dir = "/opt/gitlab-runner/builds"
    cache_dir = "/opt/gitlab-runner/cache"
    [runners.custom]
      volumes = ["/cache", "/path/to-ca-cert-dir/ca.crt:/etc/gitlab-runner/certs/ca.crt:ro"]
      config_exec = "/opt/gitlab-runner/fargate"
      config_args = ["--config", "/etc/gitlab-runner/fargate.toml", "custom", "config"]
      prepare_exec = "/opt/gitlab-runner/fargate"
      prepare_args = ["--config", "/etc/gitlab-runner/fargate.toml", "custom", "prepare"]
      run_exec = "/opt/gitlab-runner/fargate"
      run_args = ["--config", "/etc/gitlab-runner/fargate.toml", "custom", "run"]
      cleanup_exec = "/opt/gitlab-runner/fargate"
      cleanup_args = ["--config", "/etc/gitlab-runner/fargate.toml", "custom", "cleanup"]
  ```

  If you have a self-managed instance with a private CA, add this line:

  ```toml
  volumes = ["/cache", "/path/to-ca-cert-dir/ca.crt:/etc/gitlab-runner/certs/ca.crt:ro"]
  ```

  Learn more about trusting the certificate.

  The section of the config.toml file shown below is created by the registration command. Do not change it.

  ```toml
  concurrent = 1
  check_interval = 0

  [session_server]
    session_timeout = 1800

  [[runners]]
    name = "fargate-test"
    url = "https://gitlab.com/"
    token = "__REDACTED__"
    executor = "custom"
  ```

- Run sudo vim /etc/gitlab-runner/fargate.toml and add the following content:

  ```toml
  LogLevel = "info"
  LogFormat = "text"

  [Fargate]
    Cluster = "test-cluster"
    Region = "us-east-2"
    Subnet = "subnet-xxxxxx"
    SecurityGroup = "sg-xxxxxxxxxxxxx"
    TaskDefinition = "test-task:1"
    EnablePublicIP = true

  [TaskMetadata]
    Directory = "/opt/gitlab-runner/metadata"

  [SSH]
    Username = "root"
    Port = 22
  ```

  - Note the value of Cluster, as well as the name of the TaskDefinition. This example shows test-task with :1 as the revision number. If a revision number is not specified, the latest active revision is used.

  - Choose your region. Take the Subnet value from the runner manager instance.

  - To find the security group ID:

    - In AWS, in the list of instances, select the EC2 instance you created. The details are displayed.

    - Under Security groups, click the name of the group you created.

    - Copy the Security group ID.

    In a production setting, follow AWS guidelines for setting up and using security groups.

  - If EnablePublicIP is set to true, the driver uses the public IP of the task container for the SSH connection.

  - If EnablePublicIP is set to false:

    - The Fargate driver uses the task container’s private IP. For this connection to work, the VPC’s security group must have an inbound rule for port 22 (SSH) whose source is the VPC CIDR.

    - To fetch external dependencies, provisioned AWS Fargate containers must have access to the public internet. To provide public internet access for AWS Fargate containers, you can use a NAT gateway in the VPC.

  - The port number of the SSH server is optional. If omitted, the default SSH port (22) is used.

  - For more information about the section settings, see the Fargate driver documentation.

- Install the Fargate driver:

  ```shell
  sudo curl -Lo /opt/gitlab-runner/fargate "https://gitlab-runner-custom-fargate-downloads.s3.amazonaws.com/latest/fargate-linux-amd64"
  sudo chmod +x /opt/gitlab-runner/fargate
  ```
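If you prefer not to edit fargate.toml by hand, a small shell function can render the same content from variables. This is a sketch that mirrors the fargate.toml example in this step; the function name is hypothetical and the values in the example line are that example's placeholders.

```shell
#!/usr/bin/env bash
# Sketch: render fargate.toml from shell variables (mirrors the example above).
set -euo pipefail

# Usage: render_fargate_toml CLUSTER REGION SUBNET SECURITY_GROUP TASK_DEFINITION [ENABLE_PUBLIC_IP]
render_fargate_toml() {
  local cluster="$1" region="$2" subnet="$3" security_group="$4"
  local task_definition="$5" enable_public_ip="${6:-true}"
  case "$enable_public_ip" in
    true | false) ;;
    *) echo "EnablePublicIP must be true or false, got: $enable_public_ip" >&2; return 1 ;;
  esac
  cat <<EOF
LogLevel = "info"
LogFormat = "text"

[Fargate]
  Cluster = "${cluster}"
  Region = "${region}"
  Subnet = "${subnet}"
  SecurityGroup = "${security_group}"
  TaskDefinition = "${task_definition}"
  EnablePublicIP = ${enable_public_ip}

[TaskMetadata]
  Directory = "/opt/gitlab-runner/metadata"

[SSH]
  Username = "root"
  Port = 22
EOF
}

# Example with the placeholder values from this step:
# render_fargate_toml test-cluster us-east-2 subnet-xxxxxx sg-xxxxxxxxxxxxx test-task:1 true \
#   | sudo tee /etc/gitlab-runner/fargate.toml >/dev/null
```

Validating EnablePublicIP up front avoids writing an invalid TOML boolean into the file.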

Step 5: Create an ECS Fargate cluster

An Amazon ECS cluster is a grouping of ECS container instances.

- Go to https://console.aws.amazon.com/ecs/home#/clusters.

- Click Create Cluster.

- Choose Networking only type. Click Next step.

- Name it test-cluster (the same as in fargate.toml).

- Click Create.

- Click View cluster. Note the region and account ID parts from the Cluster ARN value.

- Click the Update Cluster button.

- Next to Default capacity provider strategy, click Add another provider and choose FARGATE. Click Update.

Refer to the AWS documentation for detailed instructions on setting up and working with a cluster on ECS Fargate.
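The console steps above can be approximated with one AWS CLI call that creates the cluster with FARGATE as its default capacity provider. This sketch assumes a configured AWS CLI; the function names are hypothetical, and the ARN helpers are only a convenience for noting the region and account ID.

```shell
#!/usr/bin/env bash
# Sketch: create the ECS cluster with FARGATE as the default capacity provider.
# Assumes a configured AWS CLI.
set -euo pipefail

create_fargate_cluster() {
  local cluster="$1" region="$2"
  aws ecs create-cluster \
    --region "$region" \
    --cluster-name "$cluster" \
    --capacity-providers FARGATE \
    --default-capacity-provider-strategy capacityProvider=FARGATE,weight=1
}

# A cluster ARN looks like arn:aws:ecs:REGION:ACCOUNT_ID:cluster/NAME.
# These helpers pick out the region and account ID fields.
arn_region()  { cut -d: -f4 <<<"$1"; }
arn_account() { cut -d: -f5 <<<"$1"; }

# Example: create_fargate_cluster test-cluster us-east-2
```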

Step 6: Create an ECS task definition

In this step you will create a task definition of type Fargate with a reference to the container image that you are going to use for your CI builds.

- Go to https://console.aws.amazon.com/ecs/home#/taskDefinitions.

- Click Create new Task Definition.

- Choose FARGATE and click Next step.

- Name it test-task. (Note: The name is the same value defined in the fargate.toml file but without :1.)

- Select values for Task memory (GB) and Task CPU (vCPU).

- Click Add container. Then:

  - Name it ci-coordinator, so the Fargate driver can inject the SSH_PUBLIC_KEY environment variable.

  - Define image (for example registry.gitlab.com/tmaczukin-test-projects/fargate-driver-debian:latest).

  - Define port mapping for 22/TCP.

  - Click Add.

- Click Create.

- Click View task definition.

The Fargate driver injects the SSH_PUBLIC_KEY environment variable only into containers named ci-coordinator. You must have a container with this name in all task definitions used by the Fargate driver. The container with this name should be the one that has the SSH server and all GitLab Runner requirements installed, as described above.

Refer to the AWS documentation for detailed instructions on setting up and working with task definitions.

Refer to the AWS documentation Amazon ECS task execution IAM role for information on the ECS service permissions required to launch images from an AWS ECR.

Refer to the AWS documentation Private registry authentication for tasks for information on having ECS authenticate to private registries including any hosted on a GitLab instance.
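As a sketch of the same task definition in JSON form, the function below renders a Fargate task definition with one container named ci-coordinator that maps port 22, and a second function registers it with the AWS CLI. The CPU and memory defaults, the execution role ARN, and the function names are assumptions; match them to the values you chose in the console.

```shell
#!/usr/bin/env bash
# Sketch: render and register a Fargate task definition for the CI container.
# Assumes a configured AWS CLI; role ARN, CPU and memory values are examples.
set -euo pipefail

# Usage: render_task_definition FAMILY IMAGE EXECUTION_ROLE_ARN [CPU] [MEMORY]
render_task_definition() {
  local family="$1" image="$2" execution_role_arn="$3"
  local cpu="${4:-512}" memory="${5:-1024}"
  cat <<EOF
{
  "family": "${family}",
  "requiresCompatibilities": ["FARGATE"],
  "networkMode": "awsvpc",
  "cpu": "${cpu}",
  "memory": "${memory}",
  "executionRoleArn": "${execution_role_arn}",
  "containerDefinitions": [
    {
      "name": "ci-coordinator",
      "image": "${image}",
      "essential": true,
      "portMappings": [{ "containerPort": 22, "protocol": "tcp" }]
    }
  ]
}
EOF
}

# Register the JSON file; each call creates a new revision (test-task:1, :2, ...).
register_task_definition() {
  aws ecs register-task-definition --cli-input-json "file://$1"
}

# Example:
# render_task_definition test-task \
#   registry.gitlab.com/tmaczukin-test-projects/fargate-driver-debian:latest \
#   arn:aws:iam::123456789012:role/ecsTaskExecutionRole > task-definition.json
# register_task_definition task-definition.json
```

Because every registration creates a new revision, remember to update TaskDefinition in fargate.toml if you pin a revision number.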

At this point the runner manager and Fargate Driver are configured and ready to start executing jobs on AWS Fargate.

Step 7: Test the configuration

Your configuration should now be ready to use.

- In your GitLab project, create a simple .gitlab-ci.yml file:

  ```yaml
  test:
    script:
      - echo "It works!"
      - for i in $(seq 1 30); do echo "."; sleep 1; done
  ```

- Go to your project’s CI/CD > Pipelines.

- Click Run Pipeline.

- Update the branch and any variables and click Run Pipeline.
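While the pipeline runs, you can confirm that the Fargate driver actually started a task. The example job runs for roughly 30 seconds, which leaves time to see it. This is a sketch assuming a configured AWS CLI and the cluster name from Step 5; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: wait until the Fargate driver has a task running in the cluster.
# Assumes a configured AWS CLI.
set -euo pipefail

running_tasks() {
  aws ecs list-tasks --cluster "$1" --desired-status RUNNING \
    --query 'taskArns' --output text
}

# Usage: wait_for_ci_task CLUSTER [ATTEMPTS] [SECONDS_BETWEEN_ATTEMPTS]
wait_for_ci_task() {
  local cluster="$1" attempts="${2:-30}" interval="${3:-2}" tasks i
  for (( i = 1; i <= attempts; i++ )); do
    tasks="$(running_tasks "$cluster")"
    if [ -n "$tasks" ] && [ "$tasks" != "None" ]; then
      printf '%s\n' "$tasks"
      return 0
    fi
    sleep "$interval"
  done
  echo "no running task found in $cluster after $attempts checks" >&2
  return 1
}

# Example: wait_for_ci_task test-cluster
```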

The image and services keywords in your .gitlab-ci.yml file are ignored. The runner only uses the values specified in the task definition.

Clean up

If you want to perform a cleanup after testing the custom executor with AWS Fargate, remove the following objects:

- EC2 instance, key pair, IAM role and security group created in step 3.

- ECS Fargate cluster created in step 5.

- ECS task definition created in step 6.
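If you created the resources with the names used in this document, the cleanup can be scripted roughly as follows. This is a sketch: it assumes a configured AWS CLI with permission to delete these resources and the task definition revision test-task:1. It also relies on the fact that the IAM console creates an instance profile with the same name when you create a role for EC2. The instance ID and security group ID are arguments because AWS generated them; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: delete the test resources created in steps 3, 5 and 6.
# Assumes a configured AWS CLI and the resource names used in this document.
set -euo pipefail

# Usage: cleanup_fargate_test INSTANCE_ID SECURITY_GROUP_ID
cleanup_fargate_test() {
  local instance_id="$1" security_group_id="$2"
  local role="fargate-test-instance"

  # ECS task definition (step 6) and cluster (step 5).
  aws ecs deregister-task-definition --task-definition test-task:1 >/dev/null
  aws ecs delete-cluster --cluster test-cluster >/dev/null

  # EC2 instance, security group and key pair (step 3). The security group
  # can only be deleted once no instance uses it.
  aws ec2 terminate-instances --instance-ids "$instance_id" >/dev/null
  aws ec2 wait instance-terminated --instance-ids "$instance_id"
  aws ec2 delete-security-group --group-id "$security_group_id"
  aws ec2 delete-key-pair --key-name fargate-runner-manager

  # IAM role (step 3) and the instance profile the console created for it.
  aws iam remove-role-from-instance-profile \
    --instance-profile-name "$role" --role-name "$role"
  aws iam delete-instance-profile --instance-profile-name "$role"
  aws iam detach-role-policy --role-name "$role" \
    --policy-arn arn:aws:iam::aws:policy/AmazonECS_FullAccess
  aws iam delete-role --role-name "$role"
}

# Example: cleanup_fargate_test i-0123456789abcdef0 sg-xxxxxxxxxxxxx
```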

Configure a private AWS Fargate task

To ensure a high level of security, configure a private AWS Fargate task. In this configuration, executors use only internal AWS IP addresses, and allow outbound traffic only from AWS so that CI jobs run on a private AWS Fargate instance.

To configure a private AWS Fargate task, complete the following steps to configure AWS and run the AWS Fargate task in the private subnet:

- Ensure the existing public subnet has not reserved all IP addresses in the VPC address range. Inspect the CIDR address ranges of the VPC and subnet. If the subnet CIDR address range is a subset of the VPC’s CIDR address range, skip steps 2 and 4. Otherwise your VPC has no free address range, so you must delete and recreate the VPC and the public subnet:

  - Delete your existing subnet and VPC.

  - Create a VPC with the same configuration as the VPC you deleted and update the CIDR address, for example 10.0.0.0/23.

  - Create a public subnet with the same configuration as the subnet you deleted. Use a CIDR address that is a subset of the VPC’s address range, for example 10.0.0.0/24.

- Create a private subnet with the same configuration as the public subnet. Use a CIDR address range that does not overlap the public subnet range, for example 10.0.1.0/24.

- Create a NAT gateway, and place it inside the public subnet.

- Modify the private subnet routing table so that the destination 0.0.0.0/0 points to the NAT gateway.

- Update the fargate.toml configuration:

  ```toml
  Subnet = "private-subnet-id"
  EnablePublicIP = false
  UsePublicIP = false
  ```

- Add the following inline policy to the IAM role associated with your Fargate task (the IAM role associated with Fargate tasks is typically named ecsTaskExecutionRole and should already exist):

  ```json
  {
    "Statement": [
      {
        "Sid": "VisualEditor0",
        "Effect": "Allow",
        "Action": [
          "secretsmanager:GetSecretValue",
          "kms:Decrypt",
          "ssm:GetParameters"
        ],
        "Resource": [
          "arn:aws:secretsmanager:*:<account-id>:secret:*",
          "arn:aws:kms:*:<account-id>:key/*"
        ]
      }
    ]
  }
  ```

- Change the “inbound rules” of your security group to reference the security group itself. In the AWS configuration dialog:

  - Set Type to SSH.

  - Set Source to Custom.

  - Select the security group.

  - Remove the existing inbound rule that allows SSH access from any host.
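To decide whether the VPC has room for a private subnet, you can compare the CIDR ranges directly. The pure-bash helpers below are a sketch with hypothetical names. vpc_has_free_space succeeds when the public subnet sits inside the VPC but does not use its whole range. cidr_overlaps checks whether two ranges share any address, for example the new private subnet and the public one.

```shell
#!/usr/bin/env bash
# Sketch: compare IPv4 CIDR ranges with bash arithmetic (no external tools).
set -euo pipefail

# Convert a dotted IPv4 address to an integer, e.g. 10.0.1.0 -> 167772416.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Print the first and last address of a CIDR block as integers.
cidr_bounds() {
  local ip="${1%/*}" prefix="${1#*/}" base
  base=$(( $(ip_to_int "$ip") & (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$base $(( base + (1 << (32 - prefix)) - 1 ))"
}

# Succeed if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  local o_lo o_hi i_lo i_hi
  read -r o_lo o_hi <<<"$(cidr_bounds "$1")"
  read -r i_lo i_hi <<<"$(cidr_bounds "$2")"
  (( i_lo >= o_lo && i_hi <= o_hi ))
}

# Succeed if CIDR blocks $1 and $2 share at least one address.
cidr_overlaps() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<<"$(cidr_bounds "$1")"
  read -r b_lo b_hi <<<"$(cidr_bounds "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

# Succeed if public subnet $2 is inside VPC $1 but leaves addresses free.
vpc_has_free_space() {
  local v_lo v_hi s_lo s_hi
  cidr_contains "$1" "$2" || return 1
  read -r v_lo v_hi <<<"$(cidr_bounds "$1")"
  read -r s_lo s_hi <<<"$(cidr_bounds "$2")"
  (( s_hi - s_lo < v_hi - v_lo ))
}
```

With the example ranges in the steps above, vpc_has_free_space 10.0.0.0/23 10.0.0.0/24 succeeds, cidr_contains 10.0.0.0/23 10.0.1.0/24 succeeds, and cidr_overlaps 10.0.0.0/24 10.0.1.0/24 fails, so 10.0.1.0/24 fits as the private subnet.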

For more information, see the following AWS documentation:

- Amazon ECS task execution IAM role

- Amazon ECR interface VPC endpoints (AWS PrivateLink)

- Amazon ECS interface VPC endpoints

- VPC with public and private subnets
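The security group and IAM policy changes from the private-task steps can also be applied with the AWS CLI. This sketch assumes a configured AWS CLI, that the inline policy JSON shown above is saved to a local file, and that the open SSH rule you are removing uses 0.0.0.0/0. The function names and the inline policy name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: apply the private-task security group and IAM changes with the AWS CLI.
# Assumes a configured AWS CLI and the inline policy JSON saved to a local file.
set -euo pipefail

# Allow SSH only from members of the same security group, then drop the
# rule that allowed SSH from any IPv4 address.
allow_ssh_within_group() {
  local group_id="$1"
  aws ec2 authorize-security-group-ingress --group-id "$group_id" \
    --protocol tcp --port 22 --source-group "$group_id"
  aws ec2 revoke-security-group-ingress --group-id "$group_id" \
    --protocol tcp --port 22 --cidr 0.0.0.0/0
}

# Attach the inline policy to the task execution role.
# Usage: put_task_execution_policy POLICY_FILE [ROLE_NAME]
put_task_execution_policy() {
  local policy_file="$1" role="${2:-ecsTaskExecutionRole}"
  aws iam put-role-policy --role-name "$role" \
    --policy-name fargate-private-task-access \
    --policy-document "file://${policy_file}"
}

# Example:
# allow_ssh_within_group sg-xxxxxxxxxxxxx
# put_task_execution_policy policy.json
```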

Troubleshooting

No Container Instances were found in your cluster error when testing the configuration

```plaintext
error="starting new Fargate task: running new task on Fargate: error starting AWS Fargate Task: InvalidParameterException: No Container Instances were found in your cluster."
```

The AWS Fargate Driver requires the ECS Cluster to be configured with a default capacity provider strategy.

Further reading:

- A default capacity provider strategy is associated with each Amazon ECS cluster. If no other capacity provider strategy or launch type is specified, the cluster uses this strategy when a task runs or a service is created.

- If a capacityProviderStrategy is specified, the launchType parameter must be omitted. If no capacityProviderStrategy or launchType is specified, the defaultCapacityProviderStrategy for the cluster is used.
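To check and fix the default capacity provider strategy from the command line, you could use a sketch like the following. It assumes a configured AWS CLI, and the function names are hypothetical. put-cluster-capacity-providers sets the complete list of capacity providers for the cluster, so include any providers the cluster already uses.

```shell
#!/usr/bin/env bash
# Sketch: make sure the cluster's default capacity provider strategy uses FARGATE.
# Assumes a configured AWS CLI.
set -euo pipefail

# Print the capacity providers in the cluster's default strategy (may be empty).
default_strategy_providers() {
  aws ecs describe-clusters --clusters "$1" \
    --query 'clusters[0].defaultCapacityProviderStrategy[].capacityProvider' \
    --output text
}

ensure_fargate_default_strategy() {
  local cluster="$1" current
  current="$(default_strategy_providers "$cluster")"
  if grep -qw FARGATE <<<"$current"; then
    echo "default strategy already uses FARGATE"
    return 0
  fi
  # This call replaces the cluster's capacity provider list, so add any
  # providers the cluster already uses to --capacity-providers.
  aws ecs put-cluster-capacity-providers --cluster "$cluster" \
    --capacity-providers FARGATE \
    --default-capacity-provider-strategy capacityProvider=FARGATE,weight=1
}

# Example: ensure_fargate_default_strategy test-cluster
```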

Metadata file does not exist error when running jobs

```plaintext
Application execution failed PID=xxxxx error="obtaining information about the running task: trying to access file \"/opt/gitlab-runner/metadata/<runner_token>-xxxxx.json\": file does not exist" cleanup_std=err job=xxxxx project=xx runner=<runner_token>
```

Ensure that your IAM Role policy is configured correctly and can perform write operations to create the metadata JSON file in /opt/gitlab-runner/metadata/. To test in a non-production environment, use the AmazonECS_FullAccess policy. Review your IAM role policy according to your organization’s security requirements.
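Alongside reviewing the IAM role policy, it can help to confirm the local prerequisites from Step 4 on the runner manager. The check below is a sketch with a hypothetical function name. It verifies that the driver binary is executable, that fargate.toml exists, and that the metadata directory is writable by the user running the check, so run it in a shell as the same user that runs GitLab Runner.

```shell
#!/usr/bin/env bash
# Sketch: check the runner manager's local Fargate driver setup from Step 4.
set -euo pipefail

# Usage: runner_preflight [PATH_PREFIX]
# PATH_PREFIX defaults to "" (the real filesystem); it only exists so the
# check can be pointed at a scratch directory.
runner_preflight() {
  local prefix="${1:-}" status=0
  local driver="${prefix}/opt/gitlab-runner/fargate"
  local config="${prefix}/etc/gitlab-runner/fargate.toml"
  local metadata_dir="${prefix}/opt/gitlab-runner/metadata"
  local probe="${metadata_dir}/.preflight-$$"

  if [ ! -x "$driver" ]; then
    echo "driver missing or not executable: $driver" >&2
    status=1
  fi
  if [ ! -f "$config" ]; then
    echo "driver config missing: $config" >&2
    status=1
  fi
  # The driver stores task metadata JSON files here, so it must be writable.
  if ( : > "$probe" ) 2>/dev/null; then
    rm -f "$probe"
  else
    echo "cannot write to metadata directory: $metadata_dir" >&2
    status=1
  fi
  return "$status"
}

# Example: runner_preflight && echo "local setup looks fine"
```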

connection timed out when running jobs

```plaintext
Application execution failed PID=xxxx error="executing the script on the remote host: executing script on container with IP \"172.x.x.x\": connecting to server: connecting to server \"172.x.x.x:22\" as user \"root\": dial tcp 172.x.x.x:22: connect: connection timed out"
```

If EnablePublicIP is configured to false, ensure that your VPC’s Security Group has an inbound rule that allows SSH connectivity. Your AWS Fargate task container must be able to accept SSH traffic from the GitLab Runner EC2 instance.
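To tell a blocked connection apart from a refused one, you can probe the task's port 22 from the runner manager instance. A timeout usually points at security group rules or routing, while an immediate refusal points at the container itself, which the next section covers. This sketch relies on bash's /dev/tcp redirection and the timeout command from GNU coreutils (both available on Ubuntu). The function name is hypothetical; pass the IP from the error message as the host.

```shell
#!/usr/bin/env bash
# Sketch: probe TCP port 22 on a task container from the runner manager.
# Relies on bash's /dev/tcp redirection and GNU coreutils "timeout".
set -euo pipefail

# Usage: check_ssh_port HOST [PORT] [TIMEOUT_SECONDS]
check_ssh_port() {
  local host="$1" port="${2:-22}" seconds="${3:-5}" rc=0
  # Open a TCP connection on file descriptor 3; $0 and $1 are host and port.
  timeout "$seconds" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null || rc=$?
  case "$rc" in
    0)   echo "open: $host:$port" ;;
    124) echo "timed out: check security group inbound rules and routing" >&2 ;;
    *)   echo "refused or unreachable: check that sshd listens on port $port" >&2 ;;
  esac
  return "$rc"
}

# Example: check_ssh_port <task-ip>
```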

connection refused when running jobs

```plaintext
Application execution failed PID=xxxx error="executing the script on the remote host: executing script on container with IP \"10.x.x.x\": connecting to server: connecting to server \"10.x.x.x:22\" as user \"root\": dial tcp 10.x.x.x:22: connect: connection refused"
```

Ensure that the task container has port 22 exposed and port mapping is configured based on the instructions in Step 6: Create an ECS task definition. If the port is exposed and the container is configured:

- Check to see if there are any errors for the container in Amazon ECS > Clusters > Choose your task definition > Tasks.

- View tasks with a status of Stopped and check the latest one that failed. The logs tab has more details if there is a container failure.

Alternatively, ensure that you can run the Docker container locally.
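The Stopped tasks view in the console has a command-line counterpart. As a sketch, assuming a configured AWS CLI, the function below lists up to 100 stopped tasks in a cluster and prints each task ARN with its stopped reason. The function name is hypothetical. ECS keeps stopped tasks visible only for a limited time, so run it soon after the failure.

```shell
#!/usr/bin/env bash
# Sketch: print the stopped reason of each stopped task in a cluster.
# Assumes a configured AWS CLI.
set -euo pipefail

stopped_task_reasons() {
  local cluster="$1" tasks
  # describe-tasks accepts at most 100 task ARNs per call, so cap the list.
  tasks="$(aws ecs list-tasks --cluster "$cluster" --desired-status STOPPED \
    --max-items 100 --query 'taskArns' --output text)"
  if [ -z "$tasks" ] || [ "$tasks" = "None" ]; then
    echo "no stopped tasks found in $cluster" >&2
    return 1
  fi
  # $tasks is intentionally unquoted so each ARN becomes a separate argument.
  aws ecs describe-tasks --cluster "$cluster" --tasks $tasks \
    --query 'tasks[].[taskArn, stoppedReason]' --output text
}

# Example: stopped_task_reasons test-cluster
```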

ssh: handshake failed: ssh: unable to authenticate, attempted methods [none publickey], no supported methods remain when running jobs

The following error occurs if an unsupported key type is being used due to an older version of the AWS Fargate driver.

```plaintext
Application execution failed PID=xxxx error="executing the script on the remote host: executing script on container with IP \"172.x.x.x\": connecting to server: connecting to server \"172.x.x.x:22\" as user \"root\": ssh: handshake failed: ssh: unable to authenticate, attempted methods [none publickey], no supported methods remain"
```

To resolve this issue, install the latest AWS Fargate driver on the GitLab Runner EC2 instance:

```shell
sudo curl -Lo /opt/gitlab-runner/fargate "https://gitlab-runner-custom-fargate-downloads.s3.amazonaws.com/latest/fargate-linux-amd64"
sudo chmod +x /opt/gitlab-runner/fargate
```